TL,DL: The post discusses the impact of AI on productivity, particularly through the emergence of AI PCs powered by localized edge AI. It highlights how large language models and the Core Ultra processor enable AI PCs to handle diverse tasks efficiently and securely. The article also touches on the practical applications and benefits of AI PCs in various fields. The comprehensive overview emphasizes the transformative potential of AI PCs and their pivotal role in shaping the future of computing.

Translation from the Source: AI PC 是噱头还是更快的马车?

Is AI a Bubble or a Marketing Gimmick?

Since 2023, everyone has known that AI is very hot, very powerful, and almost magical. It can generate articles with elegant language and write comprehensive reports, easily surpassing 80% or even more of human output. As for text-to-image generation, music composition, and even videos, there are often impressive results. There’s no need to elaborate on its hype…

For professions like designers and copywriters, generative AI has indeed helped them speed up the creative process, eliminating the need to start from scratch. Due to its high efficiency, some people in these positions might even face the worry of losing their jobs. But for ordinary people, aside from being a novelty, AI tools like OpenAI and Stable Diffusion don’t seem to provide much practical help for their work. After all, most people don’t need to write well-structured articles or compose poems regularly. Moreover, after seeing many AI outputs, they often feel that they are mostly correct but useless information—helpful, but not very impactful.

So, when a phone manufacturer says it will no longer produce “traditional phones,” people scoff. When the concept of an AI PC emerges, it’s hard not to see it as a marketing gimmick. However, after walking around the exhibition area at Intel’s 2024 commercial client AI PC product launch, I found AI to be more useful than I imagined. Yes, useful—not needing to be breathtaking, but very useful.

The fundamental change in experience brought by localized edge AI

Since it is a commercial PC, it cannot be separated from the productivity tool attribute. If you don’t buy the latest hardware and can’t run the latest software versions, it’s easy to be labeled as having “low application skills.” Take Excel as an example. The early understanding of efficiency in Excel was using formulas for automatic calculations. Later, it was about macro code for automatic data filtering, sorting, exporting, etc., though this was quite difficult. A few years ago, learning Python seemed to be the trend, and without it, one was not considered competent in data processing. Nowadays, with data visualization being the buzzword, most Excel users have to search for tutorials online and learn on the spot for unfamiliar formulas. Complex operations often require repeated attempts.

So, can adding “AI” to a PC or installing an AI assistant make it trendy? After experiencing it firsthand, I can confirm that the AI PC is far from superficial. There is a company called ExtendOffice, specializing in Office plugins, which effectively solves the pain points of using Excel awkwardly: you just state your intention, and the AI assistant directly performs operations on the Excel sheet, such as currency conversion or encrypting a column of data. There’s no need to figure out which formula or function corresponds to your needs, no need to search for tutorials, and it skips the step-by-step learning process—the AI assistant handles it immediately.

This highlights a particularly critical selling point of the AI PC: localization, and based on that, it can be embedded into workflows and directly participate in processing. We Chinese particularly love learning, always saying “teaching someone to fish is better than giving them a fish,” but the learning curve for fishing is too long. In an AI PC, you can get both the fish and the fishing skills because the fisherman (AI assistant) is always in front of you, not to mention it can also act as a chef or secretary.

Moreover, the “embedding” mentioned earlier is not limited to a specific operation (like adding a column of data or a formula to Excel). It can generate multi-step, cross-software operations. This demonstrates the advantage of large language models: they can accept longer inputs, understand, and break them down. For example, we can tell the AI PC: “Mute the computer, then open the last read document and send it to a certain email.” Notably, as per the current demonstration, there is no need to specify the exact document name; vague instructions are understandable. Another operation that pleasantly surprised me was batch renaming files. In Windows, batch renaming files requires some small techniques and can only change them into regular names (numbers, letter suffixes, etc.). But with the help of an AI assistant, we can make file names more personalized: adding relevant customer names, different styles, etc. This seemingly simple task actually involves looking at each file, extracting key information, and even describing some abstract information based on self-understanding, then individually writing new file names—a very tedious process that becomes time-consuming with many files. With the AI assistant, it’s just a matter of saying a sentence. Understanding longer contexts, multi-modal inputs, etc., all rely on the capabilities of large language models, but this is running locally, not relying on cloud inference. Honestly, no one would think that organizing file names in the local file system requires going to the cloud, right? The hidden breaks between the edge and the cloud indeed limit our imagination, so these local operations of the AI PC really opened my mind.

Compared to the early familiar cloud-based AI tools, localization brings many obvious benefits. For instance, even when offline, natural language processing and other operations can be completed. For those early users who heavily relied on large models and encountered service failures, “the sky is falling” was a pain point. Not to mention scenarios without internet, like on a plane, maintaining continuous availability is a basic need.

Local deployment can also address data security issues. Since the rise of large models, there have been frequent news of companies accidentally leaking data. Using ChatGPT for presentations, code reviews, etc., is great, but it requires uploading documents to the cloud. This has led many companies to outright ban employees from using ChatGPT. Subsequently, many companies chose to train and fine-tune private large models using open-source models and internal data, deploying them on their own servers or cloud hosts. Furthermore, we now see that a large model with 20 billion parameters can be deployed on an AI PC based on the Core Ultra processor.

These large models deployed on AI PCs have already been applied in various vertical fields such as education, law, and medicine, generating knowledge graphs, contracts, legal opinions, and more. For example, inputting a case into ThunderSoft’s Cube intelligent legal assistant can analyze the case, find relevant legal provisions, draft legal documents, etc. In this scenario, the privacy of the case should be absolutely guaranteed, and lawyers wouldn’t dare transmit such documents to the cloud for processing. Doctors have similar constraints. For research based on medical cases and genetic data, conducting genetic target and pharmacological analyses on a PC eliminates the need to purchase servers or deploy private clouds.

Incidentally, the large model on the AI PC also makes training simpler than imagined. Feeding the local files visible to you into the AI assistant can solve the problem of “correct nonsense” that previous chatbots often produced. For example, generating a quote email template with AI is easy, but it’s normal for a robot to not understand key information like prices, which requires human refinement. If a person handles this, preparing a price list in advance is a reasonable requirement, right? Price lists and FAQs need to be summarized and refined, then used to train newcomers more effectively—that’s the traditional view. Local AI makes this simple: let it read the Outlook mailbox, and it will learn the corresponding quotes from historical emails. The generated emails won’t just be template-level but will be complete with key elements. Our job will be to confirm whether the AI’s output is correct. And these learning outcomes can be inherited.

Three Major AI Engines Support Local Large Models

In the information age, we have experienced several major technological transformations. First was the popularization of personal computers, then the internet, and then mobile internet. Now we are facing the empowerment and even restructuring of productivity by AI. The AI we discuss today is not large-scale clusters for training or inference in data centers but the PCs at our fingertips. AIGC, video production, and other applications for content creators have already continuously amazed the public. Now we further see that AI PCs can truly enhance the work efficiency of ordinary office workers: handling trivial tasks, making presentations, writing emails, finding legal provisions, etc., and seamlessly filling in some of our skill gaps, such as using unfamiliar Excel functions, creating supposedly sophisticated knowledge graphs, and so on. All this relies not only on the “intelligent emergence” of large language models but also on sufficiently powerful performance to support local deployment.

We frequently mention the “local deployment” of large models, which relies on strong AI computing power at the edge. The so-called AI PC relies on the powerful CPU+GPU+NPU triad AI engines of the Core Ultra processor, whose computing power is sufficient to support the local operation of a large language model with 20 billion parameters. As for AIGC applications represented by text-to-image generation, they are relatively easy.

Fast CPU Response: The CPU can be used to run traditional, diverse workloads and achieve low latency. The Core Ultra adopts advanced Intel 4 manufacturing process, allowing laptops to have up to 16 cores and 22 threads, with a turbo frequency of up to 5.1GHz.

High GPU Throughput: The GPU is ideal for large workloads that require parallel throughput. The Core Ultra comes standard with Arc GPU integrated graphics. The Core Ultra 7 165H includes 8 Xe-LPG cores (128 vector engines), and the Core Ultra 5 125H includes 7. Moreover, this generation of integrated graphics supports AV1 hardware encoding, enabling faster output of high-quality, high-compression-rate videos. With its leading encoding and decoding capabilities, the Arc GPU has indeed built a good reputation in the video editing industry. With a substantial increase in vector engine capabilities, many content creation ISVs have demonstrated higher efficiency in smart keying, frame interpolation, and other functions based on AI PCs.

Efficient NPU: The newly introduced NPU (Neural Processing Unit) in the Core Ultra provides 10 times the efficiency of traditional CPUs and GPUs in processing AI workloads. As an AI acceleration engine, it allows the NPU to handle high-complexity, high-demand AI workloads, greatly reducing energy consumption.

Edge AI has unlimited possibilities, and its greatest value is precisely in practicality. With sufficient computing power, whether through large-scale language models or other models, it can indeed increase the efficiency of content production and indirectly enhance the operational efficiency of every office worker.

For commercial AI PCs, Intel has also launched the vPro® platform based on Intel® Core™ Ultra, which organically combines AI with the productivity, security, manageability, and stability of the commercial platform. Broadcom demonstrated that vPro-based AI PC intelligent management transforms traditional asset management from passive to proactive: previously, it was only possible to see whether devices were “still there” and “usable,” and operations like patch upgrades were planned; with AI-enhanced vPro, it can autonomously analyze device operation, identify potential issues, automatically match corresponding patch packages, and push suggestions to maintenance personnel. Beirui’s Sunflower has an AI intelligent remote control report solution, where remote monitoring of PCs is no longer just screen recording and capturing but can automatically and in real-time identify and generate remote work records of the computer, including marking sensitive operations such as file deletion and entering specific commands. This significantly reduces the workload of maintenance personnel in checking and tracing records.

The Future is Here: Hundreds of ISVs Realizing Actual Business Applications Henry Ford once commented on the invention of the automobile: “If you ask your customers what they need, they will say they need a faster horse.”

“A faster horse” is a consumer trap. People who think AI phones and AI PCs are just gimmicks might temporarily not see the need to upgrade their horse based on convention. More deeply, the public has some misunderstandings about the implementation of AI, which manifests in two extremes: one extreme thinks it’s something for avant-garde heavy users and flagship configurations, typically in scenarios like image and video processing; the other extreme sees it as refreshing chatbots, like an enhanced search engine, useful but not necessary. In reality, the implementation of AI PCs far exceeds the imagination of many people: for commercial customers, Intel has deeply optimized cooperation with more than 100 ISVs worldwide, and over 35 local ISVs have optimized integration at the terminal, creating a huge AI ecosystem with over 300 ISV features, bringing an unprecedented AI PC experience!

Moreover, I do not think this scale of AI application realization is pie in the sky or “fighting the future.” Because in my eyes, the display of numerous AI PC solutions is like an “OpenVINO™ party.” OpenVINO™ is a cross-platform deep learning toolkit developed by Intel, meaning “Open Visual Inference and Neural Network Optimization.” This toolkit was actually released in 2018, and over the years, it has accumulated a large number of computer vision and deep learning inference applications. By the time of the Iris Xe integrated graphics era, the software and hardware combination already had a strong reputation. For example, relying on a mature algorithm store, various AI applications can be easily built on the 11th generation Core platform, from behavior detection for smart security to automatic inventory checking in stores, with quite good results. Now, as AI PC integrated graphics evolve to Xe-LPG, with doubled computing power, the various applications accumulated by OpenVINO™ will perform even better, achieving the “location” (sustainable Xe engine) and “harmony” (ISV resources of OpenVINO™) that are already in place.

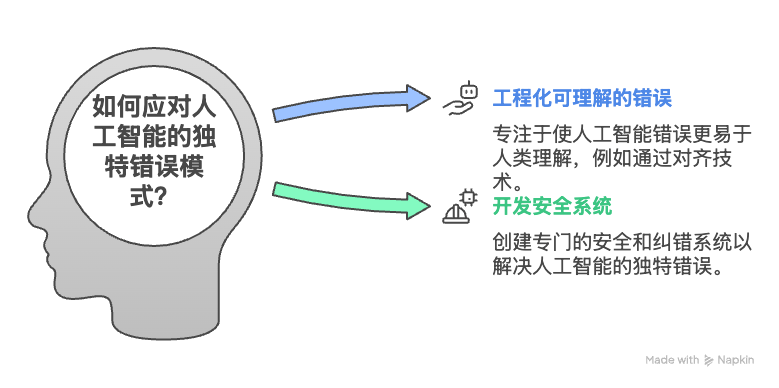

What truly ignites the AI PC is “timing,” namely, the practicalization of large language models. The breakthrough of large language models has effectively solved the problems of natural language interaction and data training, greatly lowering the threshold for ordinary users to utilize AI computing power. Earlier, I cited many examples embedded in office applications. Here, I can give another example: the combination of Kodong Intelligent Controller’s multimodal visual language model with a robotic arm. The robotic arm is a common robot application, which has long been able to perform various operations with machine vision, such as moving and sorting objects. However, traditionally, object recognition and operation require pre-training and programming. With the integration of large language models, the whole system can perform multimodal instruction recognition and execution. For instance, we can say: “Put the phone on that piece of paper.” In this scenario, we no longer need to teach the robot what a phone is, what paper is, do not need to give specific coordinates, and do not need to plan the moving path. Natural language instructions and camera images are well integrated, and execution instructions for the robotic arm are generated automatically. For such industrial scenarios, the entire process can be completed on a laptop-level computing platform, and the data does not need to leave the factory.

Therefore, what AI PC brings us is definitely not just “a faster horse,” but it subverts the way PCs are used and expands the boundaries of user capabilities. Summarizing the existing ISVs and solutions, we can categorize AI PC applications into six major scenarios:

- AI Chatbot: More professional Q&A for specific industries and fields.

- AI PC Assistant: Directly operates the PC, handling personal files, photos, videos, etc.

- AI Office Assistant: Office plugins to enhance office software usage efficiency.

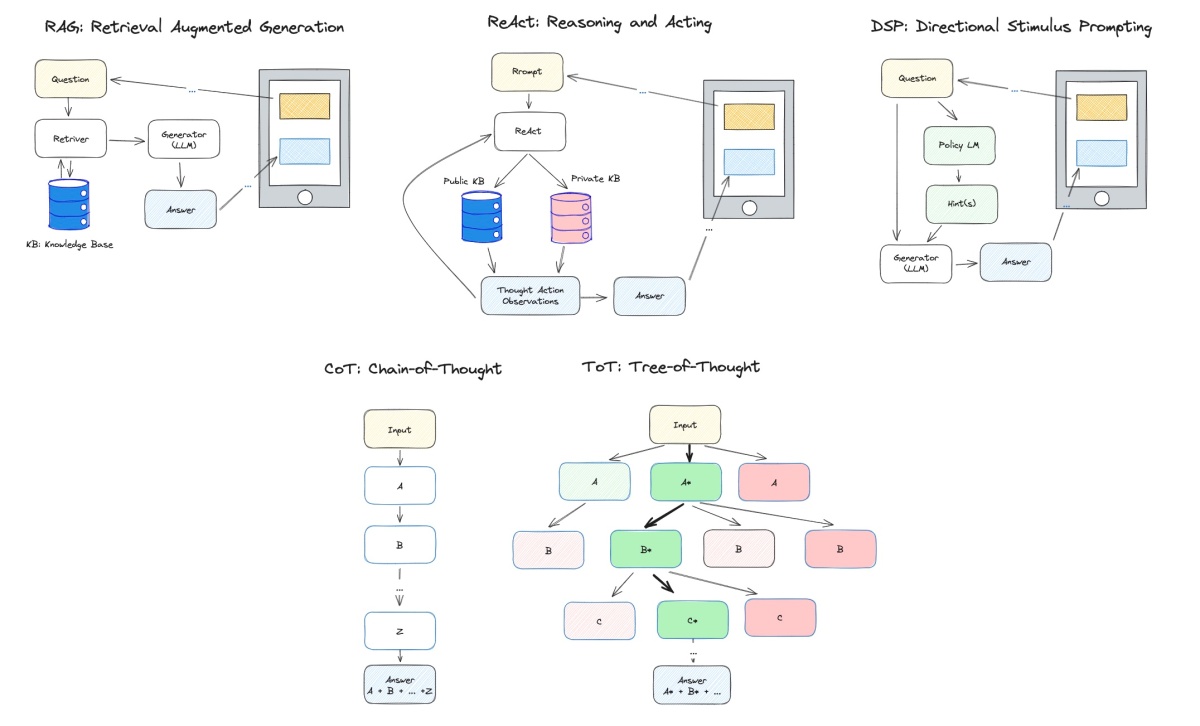

- AI Local Knowledge Base: RAG (Retrieval Augmented Generation) applications, including various text and video files.

- AI Image and Video Processing: Generation and post-processing of multimedia information such as images, videos, and audio.

- AI PC Management: More intelligent and efficient device asset and security management.

Summary

It is undeniable that the development of AI always relies on the technological innovation and combination of hardware and software. AI PCs based on Core Ultra are first of all faster, stronger, lower power consumption, and longer battery life PCs. These hardware features support AI applications that bring deeper changes to our usage experience and modes. PCs empowered with “intelligent emergence” are no longer just productivity tools; in some scenarios, they can directly transform into collaborators or even operators. Behind this are performance improvements brought by microarchitecture and production process advancements, as well as the empowerment of new productivity like large language models.

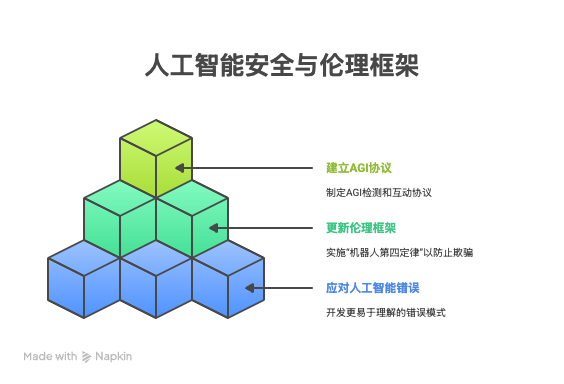

If we regard CPU, GPU, and NPU as the three major computing powers of AI PCs, correspondingly, the value of AI PCs for localizing AI (on the client side) can be summarized into three major rules: economy, physics, and data confidentiality. The so-called economy means that processing data locally can reduce cloud service costs and optimize economic efficiency; physics corresponds to the “virtual” nature of cloud resources, where local AI services can provide better timeliness, higher accuracy, and avoid transmission bottlenecks between the cloud and the client; data confidentiality means that user data stays completely local, preventing misuse and leakage.

In 2023, the rapid advancement of large language models achieved the AI era in the cloud. In 2024, the client-side implementation of large language models ushered in the AI PC era. We also look forward to AI continuously solidifying applications in the intertwined development of the cloud and the client, continuously releasing powerful productivity; and we look forward to Intel jointly advancing with ISV+OEM in the future to provide us with even stronger “new productivity.”

AI 是虚火还是营销噱头?

2023 年以来,所有人都知道 AI 非常的热、非常的牛、非常的神,生成的文章辞藻华丽、写的报告面面俱到,毫不谦虚地说,打败 80% 甚至更多的人类。至于文生图、作曲,甚至是视频,都常有令人惊艳的作品。吹爆再吹爆,无需赘述……

对于设计师、文案策划等职业,生成式 AI 确实已经帮助他们提高了迸发创意的速度,至少不必万丈高楼平地起了。由于效率太高,这些岗位中的部分人可能反而要面对失业的烦恼。但对于普通人,AI 除了猎奇,OpenAI、SD 等时髦玩意儿好像对工作也没啥实质性的帮助——毕竟平时不需要写什么四平八稳的文章,更不需要吟诗作赋,而且见多了 AI 的输出,也实在觉得多是些正确的废话,有用,但也没啥大用。

所以,当某手机厂商说以后不生产“传统手机”的时候,大家嗤之以鼻。当 AI PC 概念出现的时候,也难免觉得是营销噱头。但是,当我在 2024 英特尔商用客户端 AI PC 产品发布会的展区走了一圈之后,我发现 AI 比我想象中的更有用。是的,有用,不需要技惊四座,但,很有用。

端侧 AI 的本地化落地带来根本性的体验变化

既然是商用 PC,那就离不开生产力工具属性。如果不买最新的硬件,玩不转最新的软件版本,很容易在鄙视链中打上“应用水平低下”的标签。就拿 Excel 为例吧,最早接触 Excel 的时候,对效率的理解是会用公式,自动进行一些计算等。再然后,是宏代码,自动执行数据的筛选、排序、导出等等,但这个难度还是比较大的。前几年呢,又似乎流行起了 Python,不去学一下那都不配谈数据处理了。在言必称数据可视化的当下,多数 Excel 用户的真实情况是尝试陌生的公式都需要临时百度一下教程,现学现用,稍复杂的操作可能要屡败屡试。

那 PC 前面加上 “AI”,或者装上某个 AI 助理,就可以赶时髦了吗?我实际体验之后,确定 AI PC 绝非如此浅薄。在 AI PC 上,有个专门做 Office 插件的公司叫 ExtendOffice,就很好地解决了 Excel 用起来磕磕绊绊的痛点:你只要说出你的意图,AI 助手马上直接在 Excel 表格上进行操作,譬如币值转换,甚至加密某一列数据。不需要去琢磨脑海里的需求到底需要对应哪个公式或者功能才可以实现,不用去查找教程,也跳过了 step by step 的学习,AI 助手当场就处理完了。

这就体现了 AI PC 一个特别关键的卖点:本地化,且在此基础上,可以嵌入工作流程,直接参与处理。我们中国人特别热爱学习,总说“授人以鱼不如授人以渔”,但“渔”的学习曲线太长了。在 AI PC 里,鱼和渔可以同时获得,因为渔夫(AI 助手)随时都在你眼前,更不要说它还可以当厨师、当秘书。

而且,刚才说的“嵌入”并不局限于某一个操作环节(类似于刚才说的给 Excel 增加某一列数据、公式),而是可以生成一个多步骤的、跨软件的操作。这也体现了大语言模型的优势:可以接受较长的输入并理解、分拆。譬如,我们完全可以对 AI PC 说:帮我将电脑静音,然后打开上次阅读的文档,并把它发送给某某邮箱。需要强调的是,以目前的演示,不需要指定准确的文档名,模糊的指示是可以理解的。还有一个让我暗暗叫好的操作是批量修改文件名。在 Windows 下批量修改文件名是需要一些小技巧的,而且,只能改成有规律的文件名(数字、字母后缀)等,但在 AI 助手的帮助下,我们可以让文件名更有个性:分别加上相关客户的名字、不同的风格类型等等。这事说起来简单,但其实需要挨个查看文件、提取关键信息,甚至根据自我理解去描述一些抽象的信息,然后挨个编写新的文件名——过程非常琐碎,文件多了就很费时间,但有了 AI 助手,这就是一句话的事。理解较长的上下文、多模态输入等等,这些都必须依赖大语言模型的能力,但其实是在本地运行的,而非借助云端的推理能力。讲真,应该没有人会认为整理文件名这种本地文件系统的操作还需要去云端绕一圈吧?从端到云之间隐藏的各种断点确实限制了我们的想象力,因此,AI PC 的这些本地操作真的打开了我的思路。

相对于大家早期较为熟悉的基于云端的 AI 工具,本地化还带来了很多显而易见的好处。譬如,断网的情况下,也是可以完成自然语言的处理和其他的操作。这对于那些曾经重度依赖大模型能力,且遭遇过服务故障的早期大模型用户而言,“天塌了”就是痛点。更不要说坐飞机之类的无网络场景了,保持连续的可用性是一个很朴素的需求。

本地部署还可以解决数据安全问题。大模型爆火之初就屡屡传出某某公司不慎泄露数据的新闻。没办法,用 ChatGPT 做简报、检查代码等等确实很香啊,但前提是得把文档上传到云端。这就导致许多企业一刀切禁止员工使用 ChatGPT。后来的事情就是许多企业选择利用开源大模型和内部数据训练、微调私有的大模型,并部署在自有的服务器或云主机上。更进一步的,现在我们看到规模 200 亿参数的大模型可以部署在基于酷睿 Ultra 处理器的 AI PC 上。

这种部署在 AI PC 上的大模型已经涉及教育、法律、医学等多个垂直领域,可以生成包括知识图谱、合同、法律意见等。譬如,将案情输入中科创达的魔方智能法务助手,就可以进行案情分析,查找相关的法律条文,撰写法律文书等。在这个场景中,很显然案情的隐私是应该绝对保证的,律师不敢将这种文档传输到云端处理。医生也有类似的约束,基于病例、基因数据等进行课题研究,如果能够在 PC 上做基因靶点、药理分析等,就不必采购服务器或者部署私有云了。

顺便一提的是,AI PC 上的大模型还让训练变得比想象中要简单,把本地你能看到的文件“喂”给 AI 助理之类的就可以了。这就解决了以往聊天机器人那种活只干了一半的“正确的废话”。譬如,通过 AI 生成一个报价邮件模板是很轻松的,但是,一般来说价格这种关键信息,机器人不懂那是很正常的事情,所以需要人工进行完善。如果找一个人类来处理这种事情,那提前做一份价格表是合理要求吧?报价表、FAQ 等都是属于需要总结提炼的工作,然后才能更有效率地培训新人——这是传统观念。本地的 AI 可以让这个事情变得很简单:让它去读 Outlook 邮箱就好了,片刻之后它自己就从历史邮件中“学”到对应的报价。相应生成的邮件就不仅是模版级了,而是要素完善的,留给我们做的就只剩确认 AI 给的结果是否正确。而且这种学习成果是可以继承下来的。

三大 AI 引擎撑起本地大模型

信息时代,我们已经经历了几次重大的科技变革。首先是个人电脑的普及,然后是互联网的普及,再就是移动互联网。现在我们正在面对的是 AI 对生产力的赋能甚至重构。我们今天讲的 AI 不是在数据中心里做训练或者推理的大规模集群,而是手边的 PC。AIGC、视频制作等面向内容创作者的应用已经不断给予大众诸多震撼了。现在我们进一步看到的是 AI PC 已经可以实实在在的提升普通白领的工作效率:处理琐碎事务,做简报、写邮件、查找法条等等,并且无缝衔接式地补齐我们的一些技能短板,类似于应用我们原本并不熟悉的的 Excel 功能、制作原以为高大上的知识图谱,诸如此类。这一切当然不仅仅依赖于大语言模型的“智能涌现”,也需要足够强大的性能以支撑本地部署。

我们多次提到的大模型的“本地部署”,都离不开端侧强劲的 AI 算力。所谓的 AI PC,依靠的是酷睿 Ultra 处理器强悍的 CPU+GPU+NPU 三大 AI 引擎,其算力足够支持 200 亿参数的大语言模型在本地运行推理过程,至于插图级的文生图为代表的 AIGC 应用相对而言倒是小菜一碟了。

- CPU 快速响应:CPU 可以用来运行传统的、多样化的工作负载,并实现低延迟。酷睿 Ultra 采用先进的 Intel 4 制造工艺,可以让笔记本电脑拥有多达 16 个核心 22 个线程,睿频可高达 5.1GHz。

- GPU 高吞吐量:GPU 非常适合需要并行吞吐量的大型工作负载。酷睿 Ultra 标配 Arc GPU 核显,酷睿 Ultra 7 165H 包含 8 个 Xe-LPG 核心(128 个矢量引擎),酷睿 Ultra5 125H 包含 7 个。而且,这一代核显还支持 AV1 硬编码,可以更快速地输出高质量、高压缩率的视频。凭借领先的编解码能力,Arc GPU 确实在视频剪辑行业积累的良好的口碑。随着矢量引擎能力的大幅度提升,大量内容创作 ISV 的演示了基于 AI PC 的更高效率的智能抠像、插帧等功能。

- NPU 优异能效:酷睿 Ultra 处理器全新引入的 NPU(神经处理单元)能够以低功耗处理持续存在、频繁使用的 AI 工作负载,以确保高能效。譬如,火绒演示了利用 NPU 算力接管以往由 CPU 和 GPU 承担的病毒扫描等工作,虽然速度较调用 GPU 略低,但能耗有明显的优势,特别适合安全这种后台操作。我们已经很熟悉的视频会议中常用的美颜、背景更换、自动居中等操作,也可以交给 NPU 运行。NPU 也完全有能力仅凭一己之力运行轻量级的大语言模型,例如 TinyLlama 1.1,足以满足聊天机器人、智能助手、智能运维等连续性的业务需求,而将 CPU 和 GPU 的资源留给其他业务。

针对商用 AI PC,英特尔还推出了基于英特尔® 酷睿™ Ultra 的 vPro® 平台,将 AI 和商用平台的生产力、安全性、可管理性和稳定性有机结合。博通展示的基于 vPro 的 AI PC 智能化管理将传统的资产管理从被动变为主动:以往只能看到设备是否“还在”、“能用”,补丁升级等操作也是计划内的;而 AI 加持的 vPro 可以自主分析设备的运行,从中发现隐患并自动匹配相应的补丁包、向运维人员推送建议等。贝锐向日葵有一个AI智能远控报告方案,对 PC 的远程监控不再仅仅是录屏、截屏,而是可以自动、实时地识别和生成电脑的远程工作记录,包括标记一些敏感操作,如删除文件、输入特定的指令等。这也明显减轻了运维人员检查、回溯记录的工作量。

未来已来:数以百计的 ISV 实际业务落地

亨利福特曾经这样评价汽车的发明:“如果你问你的顾客需要什么,他们会说需要一辆更快的马车。”

“更快的马车”是一种消费陷阱,认为 AI 手机、AI PC 只是噱头的人们可能只是基于惯例认为自己暂时不需要更新马车。更深层次的,是大众对 AI 的落地有一些误解,表现为两种极端:一种极端是认为那是新潮前卫的重度用户、旗舰配置的事情,典型的场景是图像视频处理等;另一种极端是觉得是耳目一新的聊天机器人,类似于强化版的搜索引擎,有更好,无亦可。但实际上,AI PC 的落地情况远超许多人的想象:对于商用客户而言,英特尔与全球超过 100+ 个 ISV 深度优化合作,本土 35+ISV 在终端优化融合,创建包含 300 多项 ISV 特性的庞大 AI 生态系统,带来规模空前的 AI PC 体验!

而且,我并不认为这个数量级的 AI 应用落地是画饼或者“战未来”。因为在我眼里,诸多 AI PC 解决方案的展示,宛如 “OpenVINO™ 联欢会”。OpenVINO™ 是英特尔开发的跨平台深度学习工具包,意即“开放式视觉推理和神经网络优化”。这个工具包其实在 2018 年就已经发布,数年来已经积累了大量计算视觉和深度学习推理应用,发展到 Iris Xe 核显时期,软件、硬件的配合就已经很有江湖地位了。譬如依托成熟的算法商店,基于 11 代酷睿平台可以很轻松的构建各式各样的 AI 应用,从智慧安防的行为检测,到店铺自动盘点,效果相当的好。现在,AI PC 的核显进化到 Xe-LPG,算力倍增,OpenVENO™ 积累的各式应用本身就会有更好的表现,可以说“地利”(具有延续性的 Xe 引擎)和“人和”(OpenVINO™ 的 ISV 资源)早就是现成的。

真正引爆 AI PC 的是“天时”,也就是大语言模型步入实用化。大语言模型的突破很好地解决了自然语言交互和数据训练的问题,极大地降低了普通用户利用 AI 算力的门槛。前面我举了很多嵌入办公应用的例子,在这里,我可以再举一个例子:科东智能控制器的多模态视觉语言模型与机械臂的结合。机械臂是司空见惯的机器人应用,早就可以结合机器视觉做各种操作,移动、分拣物品等等。但物品的识别和操作,传统上是是需要预训练和编程的。结合大语言模型后,整套系统就可以做多模态的指令识别与执行了,譬如我们可以说:把手机放到那张纸上面。在这个场景中,我们不再需要教会机器人手机是什么、纸是什么,不需要给具体的坐标,不需要规划移动的路径。自然语言的指令,摄像头的图像,这些多模态的输入被很好地融合,并自行生成了执行指令给机械臂。对于这样的工业场景,整套流程可以在一台笔记本电脑等级的算力平台上完成,数据不需要出厂。

所以,AI PC 给我们带来的,绝对不仅仅是“更快的马车”,而是颠覆了 PC 的使用模式,拓展了用户的能力边界。盘点已有的 ISV 与解决方案,我们可以将 AI PC 的应用总结为六大场景:

- Al Chatbot:针对特定行业和领域更加专业的问答。

- AI PC 助理:直接对 PC 操作,处理个人文件、照片、视频等。

- Al Office 助手:Office 插件,提升办公软件使用效率。

- AI 本地知识库:RAG(Retrieval Augmented Generation,检索增强生成)应用,包括各类文本和视频文件。

- AI 图像视频处理:图像、视频、音频等多媒体信息的生成与后期处理。

- AI PC 管理:更加智能高效的设备资产及安全管理。

小结

不可否认,AI 的发展永远离不开硬件与软件的技术创新、相互结合,基于酷睿 Ultra 的 AI PC 首先是更快、更强、更低功耗、更长待机的 PC,这些硬件特性支撑的 AI 应用对我们的使用体验、使用模式带来了更深刻的改变。获得“智能涌现”加持的 PC 不再仅仅是生产力工具,在某些场景中,它直接可以化身协作者甚至操作者。这背后既有微架构和生产工艺提升带来的性能改进,也有大语言模型等新质生产力的赋能。

如果我们将 CPU、GPU、NPU 视作是 AI PC 的三大算力,相应的,也可以将 AI PC 让 AI 本地化(端侧)落地的价值归纳为三大法则:经济、物理、数据保密。所谓经济,是数据在本地处理可降低云服务成本,优化经济性;物理则对应云资源的“虚”,本地 AI 服务可以提供更好的及时性,更高的准确性,避免了云与端之间的传输瓶颈;数据保密,是指用户数据完全留在本地,防止滥用和泄露。

在 2023 年,大语言模型的狂飙成就了云端的 AI 元年。2024 年,大语言模型的端侧落地开启了 AI PC 元年。我们也期待 AI 在云与端的交织发展当中不断夯实应用,源源不绝地释放强大生产力;更期待英特尔未来联合 ISV+OEM 共同发力,为我们提供更加强劲的“新质生产力”。