Table of Contents

- Is coding still worth learning in 2024?

- Is AI replacing software engineers?

- Impact of AI on software engineering

- The problem with AI-generated code

- How AI can help software engineers

- Does AI really make you code faster?

- Can one AI-powered engineer do the work of many?

- Future of Software Engineering

- Reference

Credits: this post is a set of notes on the key points from a video by the YouTube content creator Programming with Mosh, with some editorial work. TL;DR: watch the video.

Is coding still worth learning in 2024?

This is a common question, especially among younger students who are trying to choose a career path that offers some security for their future income.

People are worried that AI is going to replace software engineers, or any engineer whose work involves coding and design.

As you know, in the digital era we should trust solid data instead of media and hearsay. Social media has been creating an anxious feeling that every job is going to collapse because of AI and that coding has no future.

But I’ve got a different take backed up by real-world numbers as follows.

Note: In this post, “software engineer” represents all groups of coders (data engineer, data analyst, data scientist, machine learning engineer, frontend/backend/full-stack developers, programmers and researchers).

Is AI replacing software engineers?

The short answer is NO.

But there is a lot of fear about AI replacing coders. Headlines scream about robots taking over jobs, and it can be overwhelming. But the truth is:

AI is not going to take your job. Instead, people who can work with AI will have the advantage, and they will probably take your job.

Software engineering is not going away, at least not anytime soon in our generation. Here is some data to back this up.

The U.S. Bureau of Labor Statistics (BLS) is a government agency that tracks job growth across the country and publishes the data on its website. The data shows a continued demand for software developers and for computer and information research scientists.

The BLS projects that employment of software developers will grow by 26% from 2022 to 2032, while the average across all occupations is only 3%. This is a strong indication that software engineering is here to stay.

There is a similar trend for computer and information research scientists, whose employment is expected to grow by 23% from 2022 to 2032.

The research and development conducted by these scientists turns ideas into technology. As demand for new and better technology grows, demand for computer and information research scientists will grow as well.

Impact of AI on software engineering

To better understand the impact of AI on software engineering, let’s do a quick revisit of the history of programming.

In the early days of programming, engineers wrote code in a way that only the computer understood. Then we created compilers, so we could program in a human-readable language like C++ or Java without worrying about how the code would eventually get converted into zeros and ones, or where it would be stored in memory.

Here is the fact:

Compilers did not replace programmers. They made them more efficient!

Since then we have built so many software applications and totally changed the world.

The problem with AI-generated code

AI will likely do the same and change the future. We will be able to delegate routine and repetitive coding tasks to AI, so we can focus on complex problem-solving, design and innovation.

This will allow us to build more sophisticated software applications than most people can even imagine today. But even then, just because AI can generate code doesn’t mean we can or should delegate the entire coding aspect of software development to AI, because:

AI-generated code is lower quality. We still need to review and refine it before using it in production.

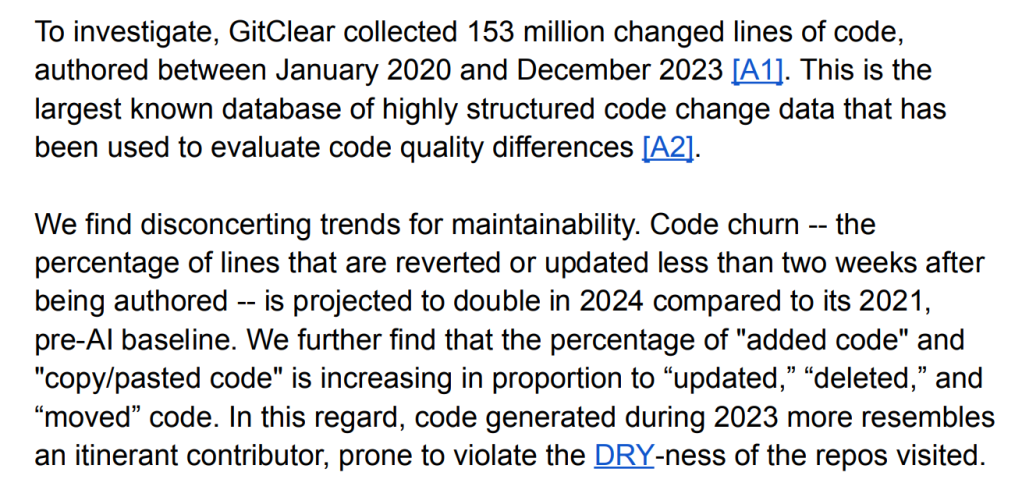

In fact, there is a study to support this: Coding on Copilot: 2023 Data Suggests Downward Pressure on Code Quality. The authors analyzed 153 million changed lines of code authored between 2020 and 2023 and found disconcerting trends for maintainability: code churn is projected to double in 2024 compared with its 2021, pre-AI baseline.
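To make "code churn" concrete, here is a minimal sketch. It assumes churn means the share of lines that are changed or reverted within two weeks of being authored, which is how the GitClear report appears to define it. The `LineRecord` type, the `churn_rate` function and the sample dates are hypothetical and made up for illustration; they are not taken from the study.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class LineRecord:
    """One authored line of code (hypothetical record type for this sketch)."""
    authored: date
    changed: Optional[date]  # when the line was next modified or reverted; None if never


def churn_rate(lines: List[LineRecord], window_days: int = 14) -> float:
    """Return the fraction of lines changed or reverted within window_days of authoring."""
    if not lines:
        return 0.0
    window = timedelta(days=window_days)
    churned = sum(
        1
        for ln in lines
        if ln.changed is not None and (ln.changed - ln.authored) < window
    )
    return churned / len(lines)


# Made-up sample: two of the four lines are rewritten within two weeks.
sample = [
    LineRecord(date(2023, 1, 2), date(2023, 1, 5)),    # changed after 3 days: churn
    LineRecord(date(2023, 1, 2), None),                # never changed
    LineRecord(date(2023, 1, 2), date(2023, 3, 1)),    # changed much later: not churn
    LineRecord(date(2023, 1, 10), date(2023, 1, 20)),  # changed after 10 days: churn
]

print(f"Churn rate: {churn_rate(sample):.0%}")
```

A rising churn rate means more freshly written code is being thrown away or rewritten shortly after it lands, which is why the study treats it as a warning sign for maintainability.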

Code Quality

So, yes, we can produce more code with AI, but:

More Code != Better Code

Humans should always review and refine AI-generated code for quality and security before deploying it to production. That means all the coding skills that software engineers currently have will stay relevant in the future.

You still need to know data structures and algorithms, programming languages and their tricky parts, and tools and frameworks. You need all of that knowledge to review and refine AI-generated code. You will just spend less time typing it into the computer.

So anyone telling you that you can use natural language to build software without understanding anything about coding is out of touch with the reality of software engineering (or they are trying to sell you something, e.g., GPUs).

source: NVIDIA CEO: No Need To Learn Coding, Anybody Can Be A Programmer With Technology

How AI can help software engineers

Of course, you can make a dummy app with AI in minutes, but this is not the same kind of software that runs our banks, transportation, healthcare, security and more. Those are the systems that really matter, and our lives depend on them. We can’t let a code monkey talk to a chatbot in English and get that software built. At least, this will not happen in our lifetime.

In the future, we will probably spend more time designing new features and products with AI instead of writing boilerplate code. We will likely delegate aspects of coding to AI, but this doesn’t mean we don’t need to learn to code.

As a software engineer or any coding practitioner, you will always need to review what AI generates and refine it either by hand or by guiding the AI to improve the code.

Keep in mind that coding is only one small part of a software engineer’s job. We often spend most of our time talking to people, understanding requirements, writing stories, discussing software/system architecture, etc.

Does AI really make you code faster?

AI can boost our programming productivity, but that does not necessarily translate into a boost in overall productivity.

In fact, McKinsey’s report, Unleashing Developer Productivity with Generative AI, found that for highly complex tasks developers saw less than 10% improvement in their speed with generative AI support.

According to the report, AI helped the most with documentation and, to some extent, with code generation. For code refactoring the improvement dropped to 20%, and for high-complexity tasks it was less than 10%.

Time savings shrank to less than 10 percent on tasks that developers deemed high in complexity due to, for example, their lack of familiarity with a necessary programming framework.
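The gap between coding speed and overall productivity can be put into numbers with a back-of-the-envelope calculation in the spirit of Amdahl's law. The sketch below assumes, purely for illustration, that coding takes up 30% of an engineer's working time; that share is a made-up figure, not a number from the McKinsey report. The function names are hypothetical as well.

```python
def overall_time_saving(coding_share: float, coding_saving: float) -> float:
    """Fraction of total working time saved when only the coding part gets faster.

    coding_share:  fraction of working time spent coding (assumed, 0 to 1)
    coding_saving: fraction of coding time saved by AI (0 up to, but not including, 1)
    """
    if not 0.0 <= coding_share <= 1.0:
        raise ValueError("coding_share must be between 0 and 1")
    if not 0.0 <= coding_saving < 1.0:
        raise ValueError("coding_saving must be at least 0 and below 1")
    return coding_share * coding_saving


def overall_speedup(coding_share: float, coding_saving: float) -> float:
    """Overall speedup factor: old total time divided by new total time."""
    return 1.0 / (1.0 - overall_time_saving(coding_share, coding_saving))


CODING_SHARE = 0.30  # assumption for illustration only

for label, saving in [("high-complexity task", 0.10), ("code refactoring", 0.20)]:
    total = overall_time_saving(CODING_SHARE, saving)
    factor = overall_speedup(CODING_SHARE, saving)
    print(f"{label}: {saving:.0%} coding time saved -> "
          f"{total:.1%} of total time saved ({factor:.2f}x overall)")
```

Under this assumption, a 10% saving on coding time is worth only about 3% of the whole working week. The meetings, requirement discussions and reviews that fill the rest of the week are not sped up at all.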

Thus, if anyone tells you that software engineers will be obsolete in 5 years, they are either ignorant or trying to sell you something.

In fact, some studies suggest that the role of software engineers (coders) may become more valuable, as they will be needed to develop, manage and maintain these AI systems.

They (software engineers) need to understand all the complexity of building software and use AI to boost their productivity.

Can one AI-powered engineer do the work of many?

Now, people are worried that one senior engineer can simply use AI to replace many engineers, eventually leaving no job opportunities for juniors.

But again, this is a fallacy, because in reality the time savings you get from AI are not as great as promised. Anyone who uses AI to generate code knows that. It takes effort to get the right prompts for usable results, and the code still needs polishing.

Thus, it is not like one engineer will suddenly have so much free time to do the job of many people.

But you may ask: that is now, what about the future? Maybe in a year or two, AI will start to build software like a human.

In theory, yes, AI is advancing and one day it may even reach and surpass human intelligence. But as a saying often attributed to Einstein goes:

In Theory, Theory and Practice are the Same.

In Practice, they are NOT.

The reality is that while machines may be able to handle repetitive and routine tasks, human creativity and expertise will still be necessary for developing complex solutions and strategies.

Software engineering will be extremely important over the next several decades. I don’t think it is going away in the future, but I do believe it will change.

Future of Software Engineering

Software powers our world and that will not change anytime soon.

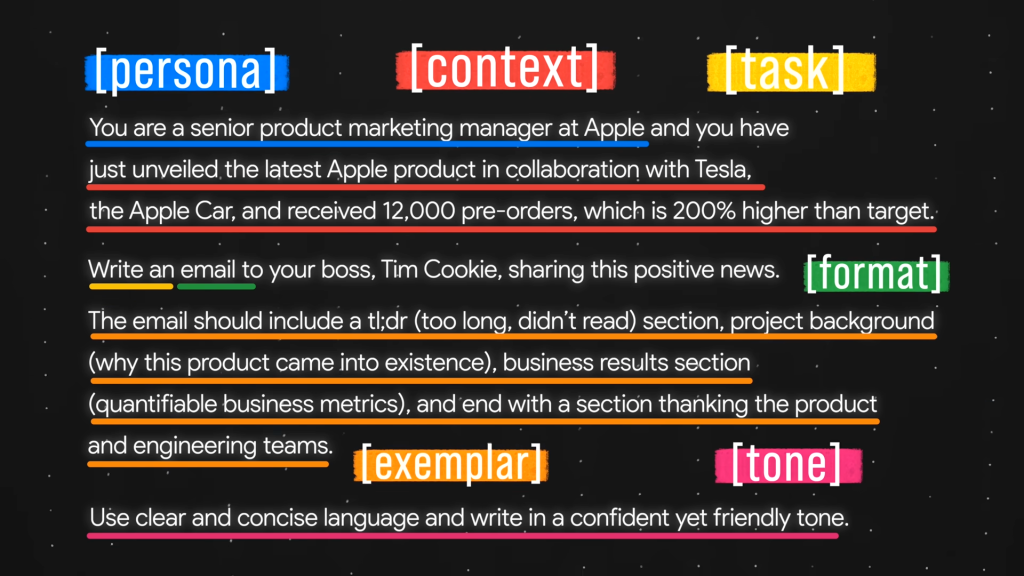

In the future, we will have to learn how to give our AI tools the right prompts to get the expected result. This is not an easy skill to develop. It requires problem-solving ability as well as knowledge of programming languages and tools. So, if you’ve already made up your mind and don’t want to invest your time in software engineering or coding, that’s perfectly fine. Follow your passion!

The coding tools will evolve as they always do, but the true coding skill lies in learning and adapting. The future engineer needs today’s coding skills and a good understanding of how to use AI effectively. The future brings more complexity and demands more knowledge and adaptability from software engineers.

If you like building things with code, and if the idea of shaping the future with technology gets you excited, don’t let negativity and fear of generative AI hold you back.

Reference

- 2024-04-17 Programming with Mosh: Is Coding Still Worth Learning in 2024?

- 2024-01-16 GitClear: Coding on Copilot: 2023 Data Suggests Downward Pressure on Code Quality

- 2023-06-27 McKinsey Digital: Unleashing developer productivity with generative AI

- U.S. Bureau of Labor Statistics (BLS): Fastest Growing Occupations; Computer and Information Research Scientists; Software Developers, Quality Assurance Analysts, and Testers