- AI Assistant Summary

- Interface with LLM

- The Mysterious In-Context Learning

- Magical Instruct understanding

- In Context Learning & Instruct Connection

- What’s Next?

AI Assistant Summary

The post first discusses different interface technologies used to connect people with language models. These include zero-shot prompting, few-shot prompting, in-context learning, and instruction. It explains the differences between zero-shot and few-shot learning and their advantages and limitations.

Next, it explores the concept of in-context learning, where language models can predict new examples by looking at existing ones without changing their parameters. It compares in-context learning with fine-tuning and highlights the differences between the two approaches.

The post then focuses on instruction understanding, dividing it into two categories: research-oriented and human/customer needs-oriented instruction. It emphasizes the importance of considering actual user needs in instruct-based tasks.

Lastly, it suggests a possible connection between in-context learning and instruction, proposing that language models could generate task descriptions based on real task instances. It mentions a study that shows improved performance when using instruction derived from this method.

Interface with LLM

Generally, the interface technologies between people and LLMs that are most often mentioned include zero-shot prompting, few-shot prompting, In-Context Learning, and instruction. These are all ways of describing a specific task to the model. But if you look at the literature, you will find that the names are quite confusing.

Zero-shot learning simply feeds the task text to the model and asks for results.

Text: i'll bet the video game is a lot more fun than the film.

Sentiment:

Few-shot learning presents a set of high-quality demonstrations, each consisting of both input and desired output, on the target task. As the model first sees good examples, it can better understand human intention and criteria for what kinds of answers are wanted. Therefore, few-shot learning often leads to better performance than zero-shot. However, it comes at the cost of more token consumption and may hit the context length limit when the input and output text are long.

Text: (lawrence bounces) all over the stage, dancing, running, sweating, mopping his face and generally displaying the wacky talent that brought him fame in the first place.

Sentiment: positive

Text: despite all evidence to the contrary, this clunker has somehow managed to pose as an actual feature movie, the kind that charges full admission and gets hyped on tv and purports to amuse small children and ostensible adults.

Sentiment: negative

Text: for the first time in years, de niro digs deep emotionally, perhaps because he's been stirred by the powerful work of his co-stars.

Sentiment: positive

Text: i'll bet the video game is a lot more fun than the film.

Sentiment:

Among these interfaces, Instruct is the one used by ChatGPT: people describe the task in natural language, for example:

Translate this sentence from Chinese to English:

....

This kind of task description used to be called “zero-shot prompting”, but is now commonly referred to as “Instruct.” The two terms mean the same thing, although the methods behind them differ.

When interacting with instruction models, we should describe the task requirement in detail, be specific and precise, and specify what to do rather than saying “do not do something”.

Please label the sentiment towards the movie of the given movie review. The sentiment label should be "positive" or "negative".

Text: i'll bet the video game is a lot more fun than the film.

Sentiment:

Explaining the desired audience is another smart way to give instructions. For example, to produce educational materials for kids:

Describe what is quantum physics to a 6-year-old.

And to produce safe content:

.. in language that is safe for work.
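The prompt styles above differ only in what is placed before the query. As a minimal sketch, the three variants can be assembled as follows. The helpers `build_prompt` and `rough_token_count` are hypothetical names, not part of any library, and a whitespace word count is only a crude stand-in for a real tokenizer.

```python
from typing import Optional, Sequence, Tuple


def build_prompt(
    query: str,
    demos: Optional[Sequence[Tuple[str, str]]] = None,
    instruction: str = "",
) -> str:
    """Assemble a sentiment prompt: optional instruction, optional demos, then the query."""
    parts = []
    if instruction:
        parts.append(instruction)
    for text, label in demos or []:
        parts.append(f"Text: {text}\nSentiment: {label}")
    # The final block is left unanswered so the model completes the label.
    parts.append(f"Text: {query}\nSentiment:")
    return "\n\n".join(parts)


def rough_token_count(prompt: str) -> int:
    """Whitespace word count; a crude proxy for a tokenizer, used only for comparison."""
    return len(prompt.split())


query = "i'll bet the video game is a lot more fun than the film."
demos = [
    ("for the first time in years, de niro digs deep emotionally, perhaps "
     "because he's been stirred by the powerful work of his co-stars.", "positive"),
    ("despite all evidence to the contrary, this clunker has somehow managed "
     "to pose as an actual feature movie, the kind that charges full admission "
     "and gets hyped on tv and purports to amuse small children and ostensible "
     "adults.", "negative"),
]

zero_shot = build_prompt(query)
few_shot = build_prompt(query, demos=demos)
instruct = build_prompt(
    query,
    instruction="Please label the sentiment towards the movie of the given movie "
                'review. The sentiment label should be "positive" or "negative".',
)

for name, prompt in [("zero-shot", zero_shot), ("few-shot", few_shot), ("instruct", instruct)]:
    print(name, rough_token_count(prompt))
```

The few-shot variant is the longest of the three, which is the token cost mentioned earlier.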

In-context instruction learning (Ye et al. 2023) combines few-shot learning with instruction prompting. It incorporates multiple demonstration examples across different tasks in the prompt, each demonstration consisting of instruction, task input, and output. Note that their experiments were only on classification tasks and the instruction prompt contains all label options.

Definition: Determine the speaker of the dialogue, "agent" or "customer".

Input: I have successfully booked your tickets.

Output: agent

Definition: Determine which category the question asks for, "Quantity" or "Location".

Input: What's the oldest building in US?

Output: Location

Definition: Classify the sentiment of the given movie review, "positive" or "negative".

Input: i'll bet the video game is a lot more fun than the film.

Output:
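The Definition / Input / Output layout above is regular enough to generate. The following is a rough sketch under that assumption; `Demo` and `build_icil_prompt` are hypothetical names chosen for illustration and are not taken from Ye et al.

```python
from typing import List, NamedTuple


class Demo(NamedTuple):
    definition: str  # natural-language instruction, including the label options
    task_input: str
    output: str


def build_icil_prompt(demos: List[Demo], definition: str, task_input: str) -> str:
    """Concatenate demonstrations from different tasks, then the unanswered target query."""
    blocks = [
        f"Definition: {d.definition}\nInput: {d.task_input}\nOutput: {d.output}"
        for d in demos
    ]
    blocks.append(f"Definition: {definition}\nInput: {task_input}\nOutput:")
    return "\n\n".join(blocks)


demos = [
    Demo('Determine the speaker of the dialogue, "agent" or "customer".',
         "I have successfully booked your tickets.", "agent"),
    Demo('Determine which category the question asks for, "Quantity" or "Location".',
         "What's the oldest building in US?", "Location"),
]

icil_prompt = build_icil_prompt(
    demos,
    'Classify the sentiment of the given movie review, "positive" or "negative".',
    "i'll bet the video game is a lot more fun than the film.",
)
print(icil_prompt)
```

Note that the demonstrations come from tasks other than the target task; only the final block concerns sentiment.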

In the early days of zero-shot prompting, people tried different words or sentences to express a task, repeatedly refining the wording. In practice, this mostly amounted to fitting the training data rather than getting the model to understand the task. The current Instruct approach is to give a specific command statement and aim for the language model to understand it. Both methods express a task, but the underlying ideas behind them are distinct.

In Context Learning and few-shot prompting have a similar meaning. They both involve providing examples to a language model and using them to solve new problems.

In my opinion, In Context Learning can be seen as a concrete way of describing a task through examples, while Instruct is a more abstract way of describing tasks. However, the usage of these terms can be confusing, and this understanding is just my personal opinion. Therefore, I will only discuss In Context Learning and Instruct here, and will not mention zero-shot and few-shot again.

The Mysterious In-Context Learning

If you think about it carefully, you will find that In Context Learning is a very magical technology. What’s so magical about it?

The magic is that when you provide an LLM with several examples {<x1, y1>, <x2, y2>, …, <xn, yn>} and then give it a new input <x_n+1>, the LLM can successfully predict the corresponding <y_n+1>.

When you hear this, you might ask: What’s so magical about this? Isn’t that how fine-tuning works? If you ask this, it means you haven’t thought deeply enough about this issue.

Fine-tuning and In Context Learning both seem to provide some examples to the LLM, but they are qualitatively different (refer to the figure above). Fine-tuning uses these examples as training data and uses backpropagation to modify the LLM’s parameters. The act of modifying the model parameters is what reflects the LLM learning from these examples.

In Context Learning, however, only shows the examples to the LLM and then asks it to predict a new example. It does not use backpropagation to modify the model parameters based on those examples. Since the parameters have not been modified, the LLM does not appear to have gone through a learning process. If it has not, then why can it predict new examples just by looking at a few?

This is the magic of In Context Learning. Does this remind you of a lyric: “Just because I took one more look at you in the crowd, I can never forget your face again.” The song is called “Legend”. Legendary, isn’t it?

It seems that In Context Learning does not learn knowledge from the examples. So does the LLM learn in some unusual way, or does it really not learn anything? The answer is still an unsolved mystery. Existing studies offer different explanations, and it is difficult to judge which one is right. Some conclusions even contradict each other.
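The mechanical difference between fine-tuning and In Context Learning can be shown with a deliberately tiny toy. This is an analogy only and says nothing about what actually happens inside an LLM: the "model" is a single weight `w` in `y = w * x`, and `predict_in_context` is a hypothetical stand-in that reads the examples as input and keeps no state.

```python
from typing import List, Tuple

Example = Tuple[float, float]


def finetune(w: float, examples: List[Example], lr: float = 0.1, steps: int = 200) -> float:
    """Gradient descent on mean squared error for y = w * x. Returns the UPDATED weight."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in examples) / len(examples)
        w -= lr * grad
    return w


def predict_in_context(examples: List[Example], x_new: float) -> float:
    """Fixed procedure with no stored weight: the examples are only part of the input.

    It fits a least-squares slope on the fly, purely as a stand-in for
    'whatever a frozen model does with its context'.
    """
    slope = sum(x * y for x, y in examples) / sum(x * x for x, _ in examples)
    return slope * x_new


examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

# Fine-tuning: the parameter itself is different after seeing the examples.
w_before = 0.0
w_after = finetune(w_before, examples)
y_finetuned = w_after * 4.0

# In-context: nothing persists after the call; the examples must be passed every time.
y_in_context = predict_in_context(examples, 4.0)

print(w_before, w_after, y_finetuned, y_in_context)
```

Both routes arrive at roughly the same prediction here, but only the first one leaves a changed parameter behind.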

Here are some current opinions. As for who is right and who is wrong, you can only decide for yourself. Of course, I think pursuing the truth behind this magical phenomenon is a good research topic.

“Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?” tries to show that In Context Learning does not learn a mapping from x to y.

It found that in the examples {<xi, yi>} provided to the LLM, it does not actually matter whether yi is the correct answer for xi. If we replace the correct answer with a random one, the performance of In Context Learning is largely unaffected.

What really has a greater impact on In Context Learning is the distribution of x and y, that is, the distribution of the input text x and of the candidate answers y. If you change these two distributions, for example by replacing y with something outside the set of candidate answers, the performance of In Context Learning drops sharply.

In short, this work suggests that In Context Learning does not learn the mapping function, but that the distributions of the input and output are very important and cannot be changed arbitrarily.
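The two perturbations described above can be sketched as data transformations on the demonstrations. This is an illustrative reconstruction, not the paper’s code: `replace_labels` is a hypothetical helper, and the out-of-space words are made up for the example.

```python
import random
from typing import List, Tuple

Demo = Tuple[str, str]


def replace_labels(demos: List[Demo], labels: List[str], seed: int = 0) -> List[Demo]:
    """Keep every input x unchanged and draw a new y at random from `labels`."""
    rng = random.Random(seed)
    return [(x, rng.choice(labels)) for x, _ in demos]


gold_demos = [
    ("for the first time in years, de niro digs deep emotionally, perhaps "
     "because he's been stirred by the powerful work of his co-stars.", "positive"),
    ("despite all evidence to the contrary, this clunker has somehow managed "
     "to pose as an actual feature movie.", "negative"),
]
label_space = ["positive", "negative"]

# Condition 1: random labels drawn from the SAME candidate answers.
# The x -> y mapping is broken, but the label distribution is preserved.
random_label_demos = replace_labels(gold_demos, label_space)

# Condition 2: labels drawn from OUTSIDE the candidate answers (made-up words).
# Here the output distribution itself is changed.
out_of_space_demos = replace_labels(gold_demos, ["apple", "window"])

for x, y in random_label_demos + out_of_space_demos:
    print(y, "|", x[:40])
```

According to the findings summarized above, prompts built from the first condition perform about as well as gold labels, while the second condition hurts performance sharply.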

Magical Instruct understanding

We can regard “Instruct” as a task description that is convenient for humans to understand. Under this premise, current research on “Instruct” can be divided into two types: “Instruct” that is oriented toward academic research, and “Instruct” that describes real human needs.

Fine-tuned Language Models Are Zero-Shot Learners (FLAN)

Type 1: Academic Research Oriented Instruct

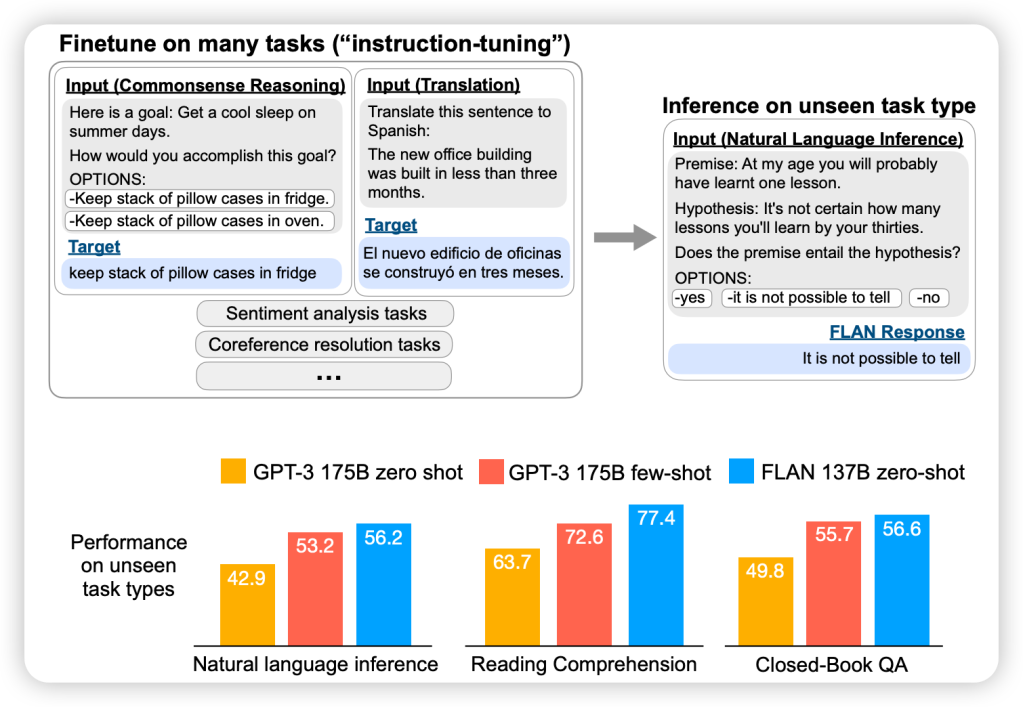

Let’s look at the first type “Instruct” which is more academically research-oriented. Its core research theme is the generalization ability of the LLM model to understand “Instruct” in multi-task scenarios.

As shown for the FLAN model in the figure above, there are many NLP tasks. For each task, the researchers construct one or more prompt templates as the Instruct of the task, and then use the training examples to fine-tune the LLM so that it learns multiple tasks at the same time.

After training the model, give the LLM model instruction for a brand-new task that it has never seen before, and then let LLM solve the zero-shot task. Based on whether the task is solved well enough, we can judge whether the LLM model has the generalization ability to understand the Instruct.
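To make the template step concrete, here is a small sketch of how one labeled record could be rendered through several instruction templates to produce fine-tuning examples. The templates, the record, and the helper `to_instruction_examples` are all hypothetical and written for illustration; they are not the templates used in the FLAN paper.

```python
from typing import Dict, List

# Hypothetical instruction templates for one task (natural language inference).
NLI_TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis? Answer yes or no.",
    "Read the premise and decide whether the hypothesis can be inferred from it.\n"
    "Premise: {premise}\nHypothesis: {hypothesis}\nAnswer yes or no.",
]


def to_instruction_examples(record: Dict[str, str], templates: List[str]) -> List[Dict[str, str]]:
    """Render one labeled record through every template, giving (input, target) pairs."""
    return [
        {
            "input": t.format(premise=record["premise"], hypothesis=record["hypothesis"]),
            "target": record["label"],
        }
        for t in templates
    ]


record = {
    "premise": "A man is playing a guitar on stage.",
    "hypothesis": "A person is making music.",
    "label": "yes",
}

train_examples = to_instruction_examples(record, NLI_TEMPLATES)
for ex in train_examples:
    print(ex["input"])
    print("->", ex["target"])
    print()
```

Repeating this over many tasks yields the multi-task training set. The zero-shot test then uses an instruction for a task that was held out of that set.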

Research findings suggest several factors that can significantly enhance an LLM’s ability to generalize to new instructions: increasing the number of tasks in multi-task training, increasing the size of the LLM, using CoT prompting, and increasing the diversity of tasks. With these measures, the LLM’s capacity to understand instructions improves substantially.

Type 2: Human/Customer Needs Oriented Instruct

The second type is instruction based on real human needs. This type of research is represented by InstructGPT and ChatGPT. This type of work is also based on multi-tasking, but the biggest difference from academic research-oriented work is that it is oriented to the real needs of human users.

Why do you say that? Because the task description prompts they use for LLM multi-task training are sampled from real requests submitted by a large number of users, instead of fixing the scope of the research task and then letting researchers write the task description prompts.

The so-called “real needs” here are reflected in two aspects: first, because they are randomly selected from the task descriptions submitted by users, the types of tasks covered are more diverse and more in line with the real needs of users; second, a certain prompt description of a task is submitted by the user and reflects what ordinary users would say when expressing task requirements, not what you think users would say. Obviously, the user experience of the LLM model modified by this type of work will be better.

In the InstructGPT paper, this method is also compared with the Instruct-based method of FLAN. First, GPT-3 is fine-tuned on the tasks, data, and prompt templates from FLAN to reproduce the FLAN method on GPT-3, and the result is then compared with InstructGPT. Because the base model of InstructGPT is also GPT-3, the two differ only in data and method, so they are comparable. The FLAN method turns out to lag far behind InstructGPT.

So what’s the reason behind it? After analyzing the data, the paper believes that the FLAN method involves relatively few task fields and is a subset of the fields involved in InstructGPT, so the effect is not good. In other words, the tasks involved in the FLAN paper are inconsistent with the actual needs of users, which results in insufficient results in real scenarios. This means that it is very important to collect real needs from user data.

In Context Learning & Instruct Connection

If In Context Learning uses concrete examples to express a task command, then Instruct is an abstract task description that is more in line with human habits.

So, a natural question is: is there any connection between them? For example, can we give the LLM several concrete examples of completing a certain task and let it find the corresponding Instruct command in natural language? (In other words, can the LLM write the Instruct command for itself by watching how humans complete the task?)

There’s actually some work being done on this issue here and there, and I think it’s a really interesting research direction.

Let’s talk about the answer first. The answer is: Yes, LLM can.

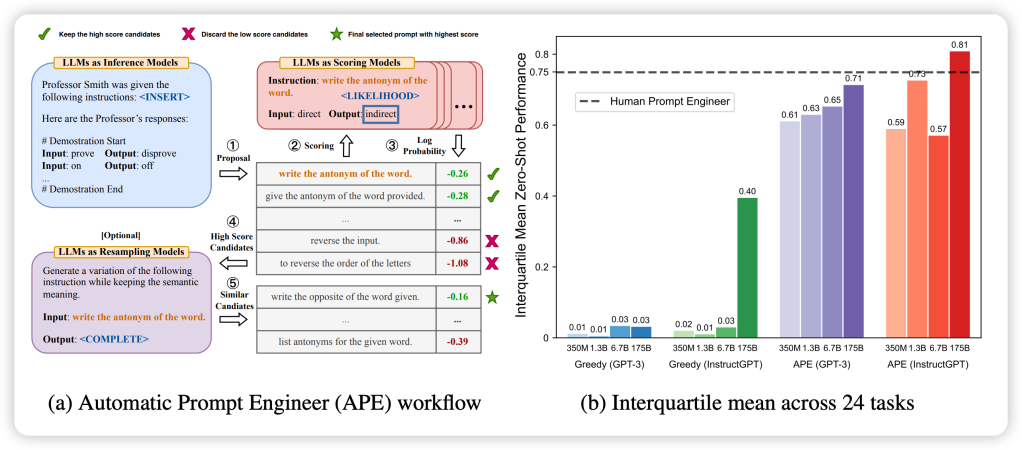

“Large Language Models are Human-Level Prompt Engineers” is a very interesting piece of work in this direction.

As shown in the figure above, for a given task, the LLM is given some examples and asked to automatically generate natural language commands that describe the task. The task description generated by the LLM is then used to test how well the task is performed.

The base models it uses are GPT-3 and InstructGPT. With this technique, the Instruct generated by the LLM performs much better than GPT-3 and InstructGPT without it, and on some tasks it reaches superhuman performance.
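The generate-then-test loop can be sketched in two small pieces: a prompt that asks the model to infer the instruction from examples, and a selection step that keeps the best-scoring candidate. The prompt wording, the helper names, and the toy scores below are assumptions for illustration, not the paper’s exact template or results. In a real setup, the candidates would come from LLM completions and the score from running each candidate on held-out examples.

```python
from typing import Callable, List, Tuple

Demo = Tuple[str, str]


def build_induction_prompt(demos: List[Demo]) -> str:
    """Ask the model to state the instruction that explains the input-output pairs."""
    pairs = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in demos)
    return (
        "I gave a friend an instruction. Based on the instruction they produced "
        "the following input-output pairs:\n\n"
        f"{pairs}\n\n"
        "The instruction was to"
    )


def select_instruction(candidates: List[str], score: Callable[[str], float]) -> str:
    """Return the candidate instruction with the highest score."""
    return max(candidates, key=score)


demos = [("hot", "cold"), ("up", "down"), ("open", "closed")]
induction_prompt = build_induction_prompt(demos)
print(induction_prompt)

# Stand-ins for LLM-generated candidates and their held-out accuracy (made-up numbers).
toy_scores = {
    "write the antonym of the word": 0.9,
    "repeat the word": 0.1,
    "translate the word to French": 0.0,
}
best = select_instruction(list(toy_scores), lambda c: toy_scores[c])
print(best)
```

Keeping the scoring function pluggable reflects the design described above: the generated instruction is judged only by how well the task is performed when it is used.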

This shows that there is a mysterious inner connection between concrete task examples and natural language descriptions of tasks. As for what exactly this connection is, there are no solid conclusions yet.

What’s Next?

Technical Review 05: How to Enhance LLM’s Reasoning Ability
